Jim Krause | Classes | P351 Video Field & Post Production

Week 12

Misc Announcements

- No in-person Lab this week. However, look at the Color Correction and Grading Exercise (due by the end of next week).

- Next week we'll screen Drama/Storytelling ALT video projects and finish the Color Correction/Grading Exercise.

- Only 3 weeks left in the semester.

- We'll watch Final Projects during our week 15 lab time.

- FINAL EXAM: The Fall 2022 Final Exam is 12:40 – 2:40 PM, Friday, December 16. (Day & time assigned by registrar.) The Final Exam will be administered via Canvas (so you can take it in the comfort of your home).

Agenda:

- Finish up legal issues

- Video codecs (cont.)

- Format Conversion (including 3:2 pull down, etc)

- Digital Video & HD Broadcasting

- Metadata (timecode, closed and open captioning)

Codec vs. Container (aka Wrapper) --------------

Know the difference between a container (e.g., QuickTime or Windows Media) and a codec (e.g., ProRes or H.264).

Containers (also known as wrappers) are designed to be multipurpose, serving a variety of different users with different needs. Some popular containers include:

- MOV (Apple)

- AVI (Audio Video Interleave) This container has limitations and has largely been replaced by ASF.

- ASF (Advanced Systems Format) & WMV (Windows Media Video)

- MXF (Material Exchange Format) often used in the form of OP1a or OPAtom (Operational Pattern)

- Comparison of Video Container Formats (Wikipedia)

Codec is a portmanteau of coder/decoder. A codec is a method for coding/compressing and decoding/decompressing digital information. It can use specialized hardware, software, or a combination of both.

A single type of container (e.g., MOV) can support many different codecs.

When you see a _____.mov it could be anything from an AIFF audio file to a feature film in ProRes HQ. All you know from the .mov is that it's a QuickTime Movie. You need to take a closer look at the file in order to see what it really is.
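One way to take that closer look outside of QuickTime Player is a command-line inspector. The sketch below assumes FFmpeg's `ffprobe` tool is installed; the function names and the sample JSON are hypothetical and only illustrate the idea that one container holds several streams, each with its own codec.

```python
import json
import subprocess


def probe_command(path):
    """Build an ffprobe command that reports each stream's type and codec as JSON."""
    return [
        "ffprobe", "-v", "error",
        "-show_entries", "stream=codec_type,codec_name",
        "-of", "json",
        path,
    ]


def summarize_streams(probe_json):
    """Turn ffprobe's JSON text into a list of (stream type, codec name) pairs."""
    streams = json.loads(probe_json).get("streams", [])
    return [(s.get("codec_type"), s.get("codec_name")) for s in streams]


def inspect_file(path):
    """Run ffprobe on a real file. Requires FFmpeg to be installed."""
    result = subprocess.run(
        probe_command(path), capture_output=True, text=True, check=True
    )
    return summarize_streams(result.stdout)


# Hand-written sample of the JSON shape ffprobe returns (illustration only).
SAMPLE_OUTPUT = (
    '{"streams": ['
    '{"codec_name": "prores", "codec_type": "video"}, '
    '{"codec_name": "pcm_s16le", "codec_type": "audio"}]}'
)
print(summarize_streams(SAMPLE_OUTPUT))
```

The same .mov extension could just as easily report `h264` and `aac`, which is the point: the extension names the wrapper, not what is inside it.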

Do you know how to determine what the codec is in QuickTime Player?

- H.265 vs H.264 Compression Explained! (This has a good explanation of interframe compression.)

Lossless (Uncompressed) versus Compressed

Shooting RAW (aka lossless or uncompressed) provides the highest quality, but at very high data rates. These files are usually too large for internal recording onto an SDXC or CF card. Thus, external recorders like an Atomos Shogun are used. These look just like monitors and can be attached to a camera. In addition to recording challenges, uncompressed data rates are too large and cumbersome for most people to incorporate into their production workflows. Because of this, most professionals opt to use a format with some form of compression.

Which Codec is Best?

Videographers have more potential production codecs on hand than ever before. Some codecs are optimized for efficient capturing and distribution (e.g., H.264 & H.265). Others provide better color depth and are well suited for editing. Only the highest-end video is uncompressed (e.g., RAW or 4:4:4). The drives in most personal computers are incapable of recording and playing back multiple streams of uncompressed video. Almost all video uses some sort of compression. It's the only way we can reasonably store it and edit it.

The more we compress the file, the more quality we lose.

Essentially the highest-quality codecs come with a price: a higher bit-rate gives you better quality but requires more storage space and greater bandwidth.
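The storage side of that trade-off is simple arithmetic: bit rate times running time. The sketch below is only a rough illustration. The function name is hypothetical, and the two bit rates are assumed round figures; real values depend on resolution, frame rate, and encoder settings.

```python
def storage_gb(bitrate_mbps, seconds):
    """Storage in gigabytes (1 GB = 1000 MB) for a stream at a constant bit rate."""
    megabits = bitrate_mbps * seconds
    megabytes = megabits / 8  # 8 bits per byte
    return megabytes / 1000


ONE_HOUR = 60 * 60

# Assumed, illustrative bit rates in megabits per second.
EXAMPLE_BITRATES_MBPS = {
    "H.264 camera original": 24,
    "Apple ProRes 422 HQ": 220,
}

for name, mbps in EXAMPLE_BITRATES_MBPS.items():
    print(f"{name}: {storage_gb(mbps, ONE_HOUR):.1f} GB per hour")
```

With these assumed numbers, an hour of the editing-friendly codec needs roughly nine times the space of the camera original.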

Most DSLRs capture video in AVC/H.264 or HEVC/H.265. While these are efficient acquisition codecs, they are NOT optimized for editing tasks such as color correction. This is why it's sometimes a good idea to convert the files to something else before you edit. If you are editing on an Avid, you might want to convert them to DNxHD. If you're editing on an Apple with Premiere or Final Cut, you might want to use Apple ProRes.

Here's a short video on codecs and their key parameters. (6:21)

Intra-frame vs. Inter-frame (GOP)-based codecs

Here's a good explanation from Videomaker.

Intra-frame - Codecs like DV, DNxHD, and Apple ProRes compress and treat every frame individually. These are known as intra-frame codecs. Intra-frame codecs are sometimes referred to as spatially compressed and generally take up more room than an inter-frame (GOP-based) codec, but they are often a better choice for editing.

Examples of intra-frame codecs include:

- Apple ProRes

- Avid DNX codecs (and SD AVR codecs)

- DV

- DVCProHD

- H.264 (all I frame)

- Panasonic D5

Inter-frame - GOP-based codecs such as MPEG-2 (used in HDV and SD DVD-Video) compress over time as well as spatially. These are known as inter-frame or temporal codecs. In inter-frame compression the picture is divided into smaller rectangles called macroblocks. These macroblocks are compressed, tracked over time, and placed into a GOP (Group of Pictures). These GOP-based formats use I, P & B frames.
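To make the GOP idea concrete: an I frame is a complete picture, a P frame is predicted from an earlier frame, and a B frame is predicted from frames on both sides of it. The sketch below prints one common display-order layout. The function name and the default values (12 frames per GOP, an I or P frame every third frame) are assumptions chosen for illustration, not settings from any particular camera or encoder.

```python
def gop_pattern(gop_length=12, anchor_spacing=3):
    """Return the frame types of one GOP in display order, e.g. 'IBBPBB...'."""
    frames = []
    for i in range(gop_length):
        if i == 0:
            frames.append("I")  # complete picture that starts the GOP
        elif i % anchor_spacing == 0:
            frames.append("P")  # predicted from an earlier I or P frame
        else:
            frames.append("B")  # predicted from frames before and after it
    return "".join(frames)


print(gop_pattern())
```

Only one frame in this GOP is a complete picture. To show any other frame, the editing system has to decode the frames it depends on first, which is part of why long-GOP footage is harder on an editor.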

Examples of inter-frame codecs include:

- HDV (MPEG-2)

- XDCAM (MPEG-2)

- MPEG-4

- H.264/AVC

- H.265/HEVC

LikakiPhotos, CC BY-SA 3.0 via Wikimedia Commons

Generally speaking, intra-frame codecs are easier for non-linear editors to process. Inter-frame codecs such as HDV do a nice job of compression, but the GOP-based structure is more taxing for non-linear editing systems.

Some popular video codecs:

- Apple ProRes (variable compression, intraframe codec)

- Avid DNxHD (variable compression, intraframe codec)

- DV - Uses 5:1 compression. Other variants of DV include DVCAM (Sony) and DVCPRO (Panasonic).

- H.261 & H.263 - Video-conferencing codecs

- H.264 - AVC (Advanced Video Coding). A version of MPEG-4 used for mass distribution and Blu-ray.

- H.265 - HEVC (High Efficiency Video Coding)

- MPEG - (Moving Picture Experts Group) uses interframe compression and can store audio, video, and data. The MPEG standard was originally divided into four different types, MPEG-1 through MPEG-4.

- MPEG-2 is widely used and is found in standard definition DVD-Video and in HDV.

- MPEG-4 is a good all-purpose multimedia codec. The H.264 variant is also used on Blu-ray Discs.

- XDCam – Developed by Sony

- Redcode

- SMPTE VC-1 - Developed by Microsoft for Blu-ray authoring

- Sorenson – Well supported by a number of platforms.

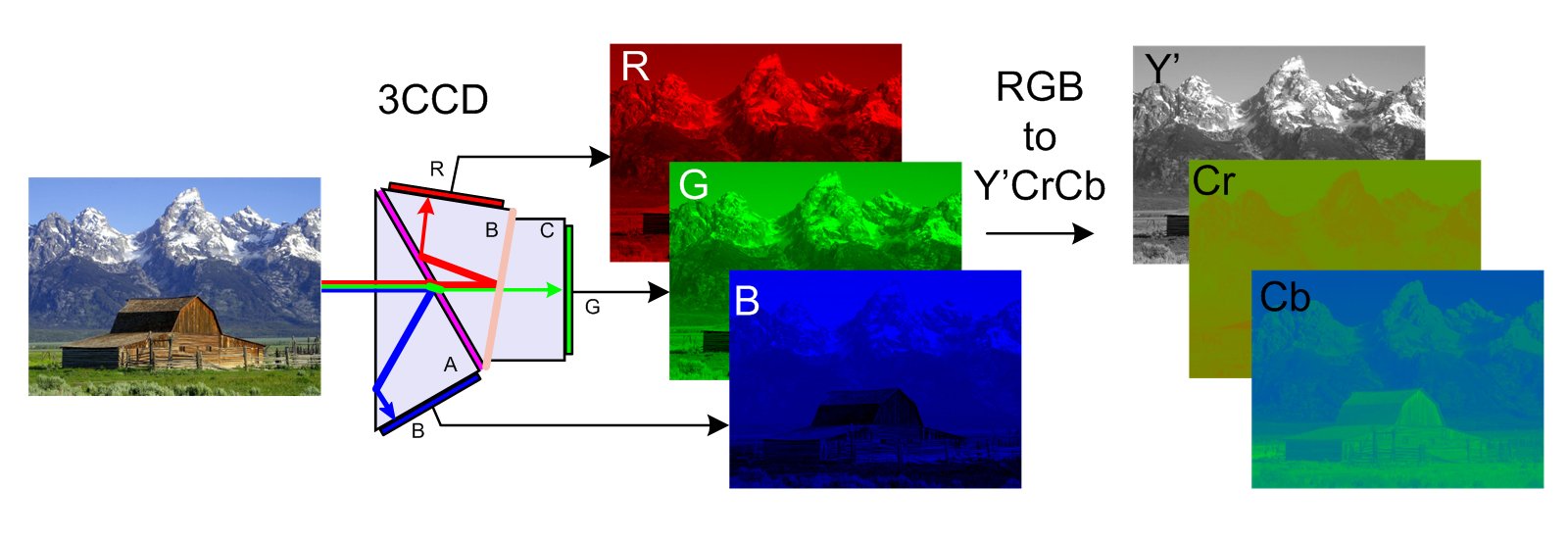

Digital Color Space

Video cameras capture in RGB, but we usually convert to a different (more efficient) color space before broadcasting or editing. RGB video keeps all three color signals at full resolution. Y, Cb, Cr is an alternate and more efficient color space. It's how most professional video is processed. Digital color component signals can be expressed as R-Y, B-Y or Cr, Cb.

Y, Cb, Cr Color Space: TV uses an additive color system based on RGB as the primary colors. If the RGB data were stored as three separate signals (plus sync), it would take a lot of room to store all the information. Fortunately, some great technical minds figured out a way to pack this information into a smaller box (figuratively speaking) by converting the RGB information into a different color space that takes up less room, with minimal loss in perceived picture quality. The luminance and two color difference signals are typically represented by Y, Cb, Cr.

Combining the RGB signals according to the original NTSC broadcast system standards creates a monochrome luminance signal (Y). So you can basically pull out the blue and red signals and subtract them from the total luminance to get the green info.

So instead of three uncompressed color signals (R G B) we can process video as a luminance signal and two digital color component signals (Y Cb Cr).
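The conversion described above can be sketched in a few lines. This version assumes the standard-definition (Rec. 601) luma weights and uses values normalized to the 0.0 to 1.0 range; the function names are hypothetical, and real video systems add offsets and scaling for 8-bit or 10-bit storage that are left out here.

```python
# Rec. 601 luma weights (an assumption for this sketch); they sum to 1.0.
KR, KG, KB = 0.299, 0.587, 0.114


def rgb_to_ycbcr(r, g, b):
    """Convert normalized RGB (0..1) to Y (0..1) and Cb, Cr (-0.5..0.5)."""
    y = KR * r + KG * g + KB * b     # luminance: weighted sum of R, G, B
    cb = (b - y) / (2 * (1 - KB))    # scaled B-Y color difference
    cr = (r - y) / (2 * (1 - KR))    # scaled R-Y color difference
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Recover RGB. Green is never stored; it is whatever is left of Y."""
    r = y + cr * 2 * (1 - KR)
    b = y + cb * 2 * (1 - KB)
    g = (y - KR * r - KB * b) / KG   # subtract the red and blue share from luminance
    return r, g, b


y, cb, cr = rgb_to_ycbcr(0.2, 0.6, 0.4)
print(ycbcr_to_rgb(y, cb, cr))
```

The round trip returns the original color (up to floating-point rounding), which shows that nothing is lost by the conversion itself. The savings come later, when Cb and Cr are sampled less often than Y.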

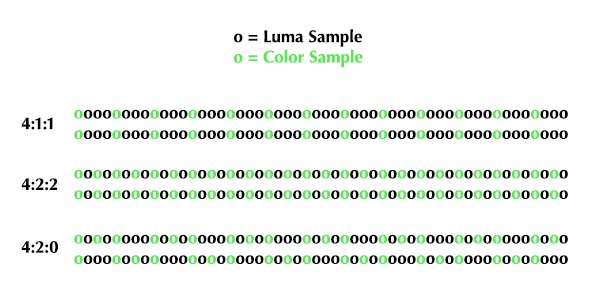

Chroma Sampling (4:4:4, 4:2:2, 4:2:0, and 4:1:1)

Digital technology provides multiple color sampling methods, including 4:4:4, 4:2:2, 4:2:0, and 4:1:1.

These numbers refer to the ratio of luminance (Y) samples to the samples of the two color signals (Cb & Cr).

In video, the most important component is luminance as it gives us all the detail necessary to see the image. As a result, luminance is sampled at a very high rate (13.5 Megahertz).

Given that the luminance portion is sampled at 13.5 MHz, let's apply the aforementioned ratios: 4:2:2 and 4:1:1. In a 4:1:1 sampling system, the color information is sampled at 1/4 the luminance rate: 3.375 MHz. In a 4:2:2 system, the color is sampled at 1/2 the rate of the luminance, or 6.75 MHz.

What about 4:2:0?

4:2:0 sampling is used in MPEG-2. The two color difference signals are sampled on alternating lines.
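The arithmetic above can be checked with a short sketch. It reads each scheme in the usual way: in a block 4 samples wide and 2 lines tall, the second number is how many chroma samples the first line gets and the third is how many the second line gets. The function names are hypothetical, and the 13.5 MHz figure is the luminance rate quoted above.

```python
LUMA_RATE_MHZ = 13.5  # luminance sampling rate quoted in the text


def parse_scheme(scheme):
    """Split a string such as '4:2:2' into three integers."""
    j, a, b = (int(part) for part in scheme.split(":"))
    return j, a, b


def chroma_rate_mhz(scheme, luma_rate_mhz=LUMA_RATE_MHZ):
    """Chroma sampling rate along a line that carries color samples."""
    j, a, _ = parse_scheme(scheme)
    return luma_rate_mhz * a / j


def relative_size(scheme):
    """Total samples compared with 4:4:4, counted over a 4-wide, 2-line block."""
    j, a, b = parse_scheme(scheme)
    luma = 2 * j          # every pixel keeps its Y sample
    chroma = 2 * (a + b)  # Cb and Cr each get a + b samples
    return (luma + chroma) / (3 * 2 * j)


for scheme in ("4:4:4", "4:2:2", "4:2:0", "4:1:1"):
    print(scheme, chroma_rate_mhz(scheme), round(relative_size(scheme), 3))
```

4:2:0 and 4:1:1 end up carrying the same amount of data. They differ in where the color detail is thrown away: 4:1:1 gives up horizontal color resolution, while 4:2:0 gives up some horizontal and some vertical.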

Geek Speak videos:

- 10 bit 4:2:2 by Griffin Hammond (2:50)

- Chroma Subsampling by mimoLive (5:25)

- Ask Alex (4:2:2 vs 4:1:1) (4:51)

- 10-bit 4:2:2 (Panasonic GH5)

Transcoding is when we convert from one codec to another.

Converting 24p video & film to interlaced 60i video -----------------------------------

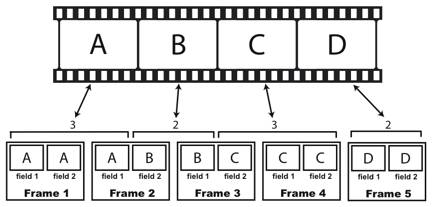

When converting film or 24p video to 30/60i (29.97) video we can use a 3:2 Pulldown. (Alternatively, we can also use a 2:3 pulldown.)

Film and many progressive video formats run at 24 frames per second (24p). 24p refers to video shot at 24 progressive frames per second, which means there are no fields.

Since film runs at 24 fps and video runs about 30 interlaced fps, the two aren't directly interchangeable on a frame for frame basis. (To be more precise, 23.976 film frames become 29.97 video frames.) In order to transfer film to 30 fps video, the film frames must be precisely sequenced into a combination of video frames and fields.

A telecine is a piece of hardware containing a film projector sequenced with a video capture system. Telecine is also the term for the process of converting film to video, which for 60i video uses a 3:2 pulldown. In the 3:2 pulldown, each frame of film gets converted to 2 or 3 fields of video.

Note how four 24p frames are converted to five interlaced video frames (ten fields).

The problem with converting film frames to fields is that some video frames have fields from two different film frames. If you think about it, you'll see that this can present all types of problems, such as a freeze frame that shows two different moments at once.
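The cadence is easier to see when it is written out. The sketch below labels four film frames A, B, C, and D, spreads them across fields in a 3, 2, 3, 2 pattern, and then pairs the fields into interlaced video frames. The function name is hypothetical, and the sketch ignores which field is upper or lower.

```python
from itertools import cycle


def pulldown(film_frames, cadence=(3, 2)):
    """Spread film frames across fields, then pair fields into video frames."""
    fields = []
    for frame, repeats in zip(film_frames, cycle(cadence)):
        fields.extend([frame] * repeats)  # each film frame becomes 2 or 3 fields
    # Every two consecutive fields make one interlaced video frame.
    return [tuple(fields[i:i + 2]) for i in range(0, len(fields), 2)]


video_frames = pulldown("ABCD")
print(video_frames)

# Video frames whose two fields come from different film frames.
mixed = [pair for pair in video_frames if pair[0] != pair[1]]
print(len(video_frames), "video frames,", len(mixed), "of them mixed")
```

Passing `cadence=(2, 3)` gives the 2:3 variant mentioned earlier. Either way, four film frames become five video frames, and two of those five mix fields from different film frames.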

Metadata ------------------------------------------------------------------------------

Metadata (data about the data) is embedded text and numeric information about the clip or program. It can include, but is not limited to:

- timecode

- camera

- closed captions

- exposure

- gain

- clip name

- running time / duration

- latitude/longitude

- audio levels

- DRM (digital rights management)

Sometimes file formats don't support metadata. In these cases the data is written to a separate sidecar file, which can be in the form of an XMP (Extensible Metadata Platform, based on XML).

Note: It's important to maintain the file/directory structure created by clip-based cameras, as important information (such as timecode) is often stored in a separate sidecar file. These files are often invisible to file browsers, so be sure to move or copy media from the top/root directory of the SD, CF, or P2 card.

Cameras can automatically create certain types of metadata. You can also add custom metadata types. For example you can create a library of video clips where you tag custom fields such as:

- INT / EXT

- Subject (horse, landscape, cloud, mountains, etc.)

- Shot type (ECU, CU, MACRO, etc.)

Closed & Open Captions

Closed Captions are on-screen text that can be turned on and off by the viewer, whether they are watching TV, YouTube, or Facebook video. It's required by the FCC that all stations broadcast programming with closed captioning data for the hearing impaired. If you watch closed-captioned programming, you'll see wide variation in readability, placement, and duration. You can't change the formatting, which is controlled by the playback platform.

Closed captions are a form of metadata.

Open Captions are also on-screen text, but they are embedded into the video and can't be turned off. It is possible to change the formatting and screen position. You likely have seen these in older foreign movies with subtitles.

Since open captions are embedded into the video, they aren't technically metadata (but could be coupled with closed captions/metadata).

The process of creating Closed or Open Captions is similar and can be done with Premiere or Media Composer.

Here's a video tutorial on making Open or Closed Captions within Premiere.

Vocabulary (Know these terms)

- Closed Captioning

- Codec

- Container/Wrapper

- GOP (I, P & B frames)

- inter-frame (temporal) compression

- intra-frame (spatial) compression

- Macroblock

- Metadata

- Sidecar file

- Telecine

- Transcode