Jim Krause | Classes | P351 Video Field & Post Production

Summer 2018 Week 5 - Tuesday

Agenda / Reality check

- This week:

- Cover new material & review Drama/Storytelling Projects.

- For the Drama/Storytelling critiques, I'm interested to read how the group logistics/dynamics worked.

- No lab Thursday. The time is dedicated for you to work on Final Projects. Aim to have a rough cut of your Final Project by Friday. This will give you time to refine your project (think color correction, graphics, & sound design).

- The Multimedia Exercise is due by Thursday, June 7. I'll cover exporting for this today.

- Next week (week 6) is the last week of class!

- Final Quiz will be next Tuesday (6/13). The lab will be devoted to editing.

- Wednesday - View Final Projects. No class Thursday.

Lecture & lab today:

- Timecode (understand the difference between drop-frame (DF) & non-drop-frame (NDF), and between Free Run & Record Run)

- Codecs & Multimedia Wrappers/Containers

- Format / Frame rate conversion

- Digital Video & High Definition Broadcasting

- Metadata & subtitles

- 2K, 4K & Ultra HD

- Resources for Post-production

- Final Exam Review

Readings:

- Cybercollege unit 16 (Waveform monitors and vectorscopes)

- Cybercollege DTV standards

- Also check out the embedded links in the text below (not on quiz)

NOTE: Some are doing good production work but are not getting a good grade due to missing paperwork.

Final Projects: review criteria

All of your written materials (except for storyboards) should be typed. The amount of detail along with the appearance and presentation of your materials & packet affects your grade.

- These were due last week, but I extended the time for some of you.

- Yes, you CAN script feature stories and documentaries. Scripts are expected for all projects.

- Talent release forms can be found on the T351 website.

Codec vs. Container (aka Wrapper) --------------

Know the difference between a container (e.g., QuickTime or Windows Media) and a codec (e.g., ProRes or H.264).

Containers (also known as multimedia wrappers) are designed to be multipurpose, serving a variety of users with different needs. A few popular containers include QuickTime (.mov), Windows Media (.wmv), and MXF (.mxf).

Codec is short for coder/decoder or compressor/decompressor. A codec is a method for compressing and decompressing digital information. It can use specialized hardware, software, or a combination of both.

Containers support a variety of different codecs.

When you see a _____.mov file, it could be anything from an AIFF audio file to a feature film in ProRes HQ. All the .mov extension tells you is that it's a QuickTime movie. You need to take a closer look at the file to see what it really contains.
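One way to "take a closer look" is to read the file's internal structure. The sketch below is a simplified illustration, not how QuickTime Player itself works: it assumes the standard QuickTime/MP4 atom layout (moov > trak > mdia > minf > stbl > stsd) and reads the four-character code (FourCC) that names each track's codec, such as `apcn` for ProRes 422 or `avc1` for H.264. The function names and the tiny fake file are hypothetical, and 64-bit atom sizes are not handled.

```python
import struct
from typing import List, Optional

# Atoms that only hold other atoms on the path down to a track's sample description.
CONTAINER_ATOMS = {b"moov", b"trak", b"mdia", b"minf", b"stbl"}


def find_codecs(data: bytes, start: int = 0, end: Optional[int] = None) -> List[str]:
    """Walk QuickTime/MP4 atoms and collect the codec FourCC of every sample description."""
    end = len(data) if end is None else end
    codecs: List[str] = []
    pos = start
    while pos + 8 <= end:
        # Every atom starts with a 4-byte big-endian size and a 4-byte type code.
        size, kind = struct.unpack(">I4s", data[pos:pos + 8])
        if size < 8:
            break  # size 0 ("to end of file") and 1 (64-bit size) are not handled here
        if kind in CONTAINER_ATOMS:
            codecs += find_codecs(data, pos + 8, pos + size)
        elif kind == b"stsd":
            # stsd payload: version (1 byte) + flags (3) + entry count (4), then the entries.
            (count,) = struct.unpack(">I", data[pos + 12:pos + 16])
            entry = pos + 16
            for _ in range(count):
                # Each entry begins with its own size and the codec FourCC.
                entry_size, fourcc = struct.unpack(">I4s", data[entry:entry + 8])
                codecs.append(fourcc.decode("latin-1"))
                entry += entry_size
        pos += size
    return codecs


def atom(kind: bytes, payload: bytes) -> bytes:
    """Build one atom (used here only to fake a tiny test file)."""
    return struct.pack(">I4s", 8 + len(payload), kind) + payload


# A fake .mov with one ProRes 422 ('apcn') video track and no real media data.
stsd = atom(b"stsd", bytes(4) + struct.pack(">I", 1) + atom(b"apcn", bytes(8)))
fake_mov = atom(b"ftyp", b"qt  ") + atom(
    b"moov", atom(b"trak", atom(b"mdia", atom(b"minf", atom(b"stbl", stsd))))
)
print(find_codecs(fake_mov))  # ['apcn']
```

The extension said "QuickTime"; only the FourCC inside the track says "ProRes".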

Who knows how to determine what the codec is in QuickTime Player?

Here's a pretty good explanation of the difference between containers and codecs from Videomaker.

In order to play back multimedia files, you need the matching decoder, which is sometimes referred to as a "component". For instance, if you have a new PC with a fresh version of Vista, you'll need to buy the MPEG-2 decoder component in order to play back DVDs.

Which Codec is Best?

Videographers have more production codecs on hand than ever before. Some codecs are optimized for efficient field capture or distribution (e.g., H.264). Others provide better color depth and are easier to edit (e.g., ProRes 4:2:2). Only the highest-end video is uncompressed. Almost all video uses some sort of compression; it's the only way we can reasonably store and edit it.

The more we compress the file, the more quality we lose.

Essentially, the highest-quality codecs come with a price: a higher bit rate gives you better quality but requires more storage space.
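To put numbers on that trade-off: storage is simply bit rate multiplied by running time. This small sketch uses illustrative bit rates (50 and 150 Mb/s), not the published rate of any particular codec, and decimal units (1 GB = 1,000,000,000 bytes).

```python
def gigabytes_per_hour(megabits_per_second: float) -> float:
    """Storage needed for one hour of a stream at a constant bit rate (decimal units)."""
    bits = megabits_per_second * 1_000_000 * 3600   # bits in one hour
    return bits / 8 / 1_000_000_000                 # bits -> bytes -> gigabytes


# Illustrative bit rates only.
for rate in (50, 150):
    print(f"{rate} Mb/s -> {gigabytes_per_hour(rate):.1f} GB per hour")
```

At those example rates, tripling the bit rate triples the storage: 22.5 GB vs. 67.5 GB per hour of footage.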

DSLRs often capture video in H.264. While it is an efficient acquisition codec, it is NOT optimized for editing. This is why it's sometimes a good idea to convert the footage to something else before you edit. If you are editing on an Avid, you might want to convert it to DNxHD. If you're editing on a Mac with Premiere or Final Cut, you might want to use Apple ProRes.

Video Codecs - Interframe verses Intraframe (review)

Only the highest end video is uncompressed. Almost all video (especially HD) uses some sort of compression. When looking at the characteristics of various video recording gear, it's important to understand the basic differences between two general types of compression.

Intraframe compression - This is where we take each individual frame and squeeze it so it all fits onto tape or disk. Time-lapse image sequences are one example. Other examples of intraframe codecs include:

- Apple ProRes

- Avid codecs (DNxHD)

- DV

- DVCProHD

- Panasonic D5

Interframe or Group of Pictures (GOP) compression - Interframe compression compresses over time as well as space. The picture is divided into smaller rectangles called macroblocks. These macroblocks are compressed, tracked over time, and placed into a GOP (Group of Pictures). Examples of interframe codecs include:

- HDV (MPEG-2)

- XDCAM (MPEG-2)

- MPEG-4

- H.264

Interframe compression is very effective and it's scalable (frames can be encoded at a variety of pixel dimensions, e.g., 720 x 480 or 1440 x 1080). The downside is that GOPs can be difficult to edit: deconstructing the GOPs during the edit taxes the computer more than intraframe codecs do.

If one is editing large amounts of Interframe/GOP footage, it's often best to transcode to an intraframe codec.
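Why GOPs are harder to edit: to show a frame in the middle of a GOP, the computer must first rebuild the frames it was predicted from. The sketch below uses a deliberately simplified dependency model (frame types listed in display order; a B-frame depends on the reference frames on either side; real decode order and open vs. closed GOPs are ignored), and the 12-frame GOP pattern is only an example.

```python
def frames_to_decode(gop: str, target: int) -> int:
    """Simplified count of frames a decoder must rebuild to show frame `target`.

    `gop` lists frame types in display order, e.g. "IBBPBBPBBPBB", and is assumed
    to start with an I-frame. Decoding starts at the nearest I-frame at or before
    the target and runs through every P-frame up to it; a B-frame also needs the
    next reference (I or P) frame after it, if there is one in the string.
    """
    start = max(i for i in range(target + 1) if gop[i] == "I")
    needed = {i for i in range(start, target + 1) if gop[i] in "IP"}
    if gop[target] == "B":
        needed.add(target)
        later = [i for i in range(target + 1, len(gop)) if gop[i] in "IP"]
        if later:
            needed.add(later[0])
    return len(needed)


print(frames_to_decode("IBBPBBPBBPBB", 9))   # long GOP, P-frame: 4
print(frames_to_decode("IBBPBBPBBPBB", 7))   # long GOP, B-frame: 5
print(frames_to_decode("IIIIIIIIIIII", 9))   # intraframe: every frame stands alone: 1
```

Even in this simplified model, displaying one B-frame near the end of the GOP means rebuilding five frames, while an intraframe codec only ever needs one. Multiply that by every cut and every scrub through the timeline and the extra processing load becomes clear.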

Chroma Sampling (a.k.a. Chroma Subsampling)

First, one must understand that our video signal is not RGB but Y/Cb/Cr, which is a type of color space used in digital video and photography. Full-bandwidth RGB has too high a data rate for all but the highest-end applications.

It's possible to work in true RGB, but Y/Cb/Cr, also known as the color difference system, is much more efficient and commonly used. Separating brightness (luma) from color lets us record the color information at a lower resolution, and our eyes notice lost color detail far less than lost brightness detail. It uses less data and still looks good.

In the color difference or Y,Cb,Cr color space we have three components:

- Y = luma or luminance component

- Cb = blue difference

- Cr = red difference
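The three components can be computed from R'G'B'. This sketch assumes the ITU-R BT.709 (HD) luma weights and works on normalized 0.0-1.0 values; it leaves out the conversion to 8- or 10-bit code values (such as 16-235 for 8-bit luma) that real video systems apply.

```python
from typing import Tuple


def rgb_to_ycbcr_709(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Convert normalized R'G'B' (0.0-1.0) to Y' (0 to 1) and Cb/Cr (-0.5 to +0.5), BT.709 weights."""
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b   # luma: green contributes most to perceived brightness
    cb = (b - y) / 1.8556                       # blue difference, scaled to +/-0.5
    cr = (r - y) / 1.5748                       # red difference, scaled to +/-0.5
    return y, cb, cr


print(rgb_to_ycbcr_709(1.0, 1.0, 1.0))  # white: full luma, (essentially) zero color difference
print(rgb_to_ycbcr_709(0.0, 0.0, 1.0))  # pure blue: low luma, Cb at its maximum of 0.5
```

Neutral colors (white, gray, black) have zero color difference, so all of their information is carried in Y.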

The meaning of: 4:4:4 / 4:2:2 / 4:1:1 / 4:2:0

What do the numbers refer to? Quite simply, the ratio of luminance to chrominance samples.

- Luma: brightness

- Chroma: Color

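The first number (4) is the width, in pixels, of the reference block and the number of luminance (Y) samples in each row of it. The second number is how many samples of each color difference signal (Cb and Cr) are taken in the first row of that block, and the third is how many are taken in the second row. A 0 means the second row has no color samples of its own and shares those of the first row.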

4:4:4 - This means there is no chroma subsampling and can refer to uncompressed RGB. It has too high a data rate for all but the highest end applications. (Uses: Sony HDCAM SR)

4:2:2 - The two color difference components are sampled at half the horizontal sample rate of the luma component. (Uses: Digital Betacam, DVCPRO HD, ProRes, XDCAM 422, Canon MXF 422)

4:1:1 - The two color difference components are sampled at 1/4 of the luma horizontal sample rate. (Uses: NTSC DV, DVCAM)

4:2:0 - The two color difference components are sampled at half the horizontal rate and only on alternate lines, so both horizontal and vertical color resolution are halved. (Uses: MPEG-2 formats such as HDV and DVD, most H.264)
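One way to read the J:a:b numbers is to count samples in a block J pixels wide and two rows tall. The sketch below does that and then estimates an uncompressed data rate; it counts only the active picture (no blanking, audio, or ancillary data), and the 30 fps figure is a round-number assumption (29.97 in practice).

```python
from typing import Tuple


def samples_per_pixel(j: int, a: int, b: int) -> float:
    """Average samples per pixel for a J:a:b scheme, counted over a J-wide, 2-row block.

    Luma: J samples in each of the 2 rows. Each chroma component (Cb and Cr):
    `a` samples in the first row plus `b` in the second.
    """
    luma = 2 * j
    chroma = 2 * (a + b)   # Cb and Cr together
    return (luma + chroma) / (2 * j)


def uncompressed_mbps(width: int, height: int, fps: float, bits: int,
                      scheme: Tuple[int, int, int]) -> float:
    """Uncompressed active-picture data rate in megabits per second."""
    return width * height * samples_per_pixel(*scheme) * bits * fps / 1_000_000


for scheme in [(4, 4, 4), (4, 2, 2), (4, 1, 1), (4, 2, 0)]:
    print(scheme, samples_per_pixel(*scheme))
# 1080-line HD, 10-bit 4:2:2, 30 frames per second
print(uncompressed_mbps(1920, 1080, 30, 10, (4, 2, 2)))
```

Note that 4:1:1 and 4:2:0 both average 1.5 samples per pixel: they carry the same amount of color data, just arranged differently. The 10-bit 4:2:2 HD example works out to roughly 1,244 Mb/s, which is why almost all video is compressed.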

What does it all mean?

A 4:2:2 component digital signal has twice the horizontal color resolution of a 4:1:1 signal. This means better color performance, particularly in areas such as special effects, chromakeying, alpha keying (transparencies), and computer-generated graphics.

- Bit-Depth and Color Sampling: 8-bit vs 10-bit / 4:2:0 vs 4:2:2

- Ask Alex - YouTube Explanation (almost accurate)

Format Conversion -----------------------------

In working with video, we often need to convert:

- codec

- pixel dimension

- frame rate

Codec conversion - Converting from one codec to another is called transcoding. For example we might want to take our DSLR's H.264 footage and convert it to ProRes 4:2:2 for editing.
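Transcoding is usually done in an editing or conversion application, but it can also be scripted. The sketch below assumes the free command-line tool ffmpeg is installed; it only builds the command, and the file names are hypothetical. `prores_ks` is one of ffmpeg's ProRes encoders, and profile 2 selects standard ProRes 422 (3 would be HQ).

```python
import shlex
from typing import List


def prores_transcode_cmd(src: str, dst: str) -> List[str]:
    """Build an ffmpeg command that transcodes `src` to ProRes 422 in a QuickTime wrapper."""
    return [
        "ffmpeg",
        "-i", src,               # input, e.g. a DSLR's H.264 clip
        "-c:v", "prores_ks",     # ffmpeg's ProRes encoder
        "-profile:v", "2",       # prores_ks profile 2 = standard ProRes 422 (3 = HQ)
        "-c:a", "pcm_s16le",     # uncompressed 16-bit audio, convenient for editing
        dst,                     # .mov output: QuickTime container
    ]


cmd = prores_transcode_cmd("MVI_0001.MOV", "MVI_0001_prores.mov")  # hypothetical file names
print(shlex.join(cmd))
# To actually run it (ffmpeg must be installed): subprocess.run(cmd, check=True)
```

Note that only the codec changes here; the container stays QuickTime on both sides.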

Pixel dimension conversion - Some cameras don't shoot at full HD pixel dimensions. For example, XDCam and HDV can be captured at 1440x1080 pixels. When we bring this footage into our editing program, we may need to upconvert it to 1920x1080 pixels. Conversely, you might have 4K footage that you downconvert to HD pixel dimensions of 1920x1080.

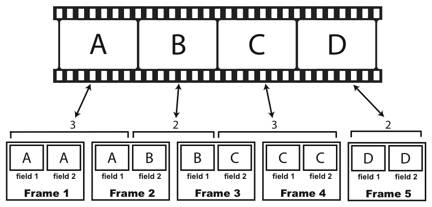
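1440x1080 footage is not cropped HD: HDV and XDCAM HD store it with non-square pixels that are stretched to full width on playback. This sketch assumes the 4/3 (about 1.333) pixel aspect ratio used by 1440x1080 HDV, and shows the simple halving that takes UHD (3840x2160) down to HD.

```python
from fractions import Fraction


def display_width(stored_width: int, pixel_aspect: Fraction) -> Fraction:
    """Width a frame occupies on screen when its pixels are not square."""
    return stored_width * pixel_aspect


# 1440x1080 HDV pixels are 4/3 as wide as they are tall.
print(display_width(1440, Fraction(4, 3)))   # 1920
# UHD 3840x2160 downconverts to HD by scaling each dimension by 1/2.
print(3840 // 2, 2160 // 2)                  # 1920 1080
```

Using `Fraction` keeps the aspect ratio exact instead of rounding 1.333...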

Frame rate Conversion - Theatrical projects are typically shot at 24 fps. In order to air on broadcast TV, they need to be converted to 60i or 30 fps. Changing the frame rate can be problematic as we are temporally altering the footage.

To convert film to video, we can use a telecine.

Telecine has two meanings: 1: a piece of hardware containing a film projector sequenced with a video capture system. 2: the process of converting film to video.

To convert 24p to 60i, we typically use a 3:2 pulldown (though it is possible to use a 2:3 or a 2:2:2:4 pulldown). Note how 4 film frames (24 fps) are converted to 5 interlaced video frames (30 fps) in the diagram below.

The problem with converting film frames to fields is that some video frames contain fields from two different film frames. If you think about it, you'll see that this can present all sorts of problems, for example when you edit or freeze-frame on one of these mixed frames.
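The standard pulldown cadence (commonly called 3:2 or 2:3) can be simulated in a few lines. This sketch assumes four film frames labeled A-D and ignores field dominance (which field comes first); it holds the frames for 2, 3, 2, and 3 fields, then pairs consecutive fields into interlaced video frames.

```python
from typing import List, Sequence, Tuple


def pulldown(film_frames: Sequence[str], cadence: Sequence[int]) -> List[Tuple[str, str]]:
    """Spread film frames across video fields using a cadence, then pair fields into video frames."""
    fields: List[str] = []
    for frame, count in zip(film_frames, cadence):
        fields.extend([frame] * count)   # each film frame is held for `count` fields
    # Two consecutive fields make one interlaced video frame.
    return [(fields[i], fields[i + 1]) for i in range(0, len(fields) - 1, 2)]


video = pulldown("ABCD", (2, 3, 2, 3))   # 4 film frames -> 10 fields -> 5 video frames
print(video)   # [('A', 'A'), ('B', 'B'), ('B', 'C'), ('C', 'D'), ('D', 'D')]
mixed = [f for f in video if f[0] != f[1]]
print(f"{len(mixed)} of {len(video)} video frames mix two film frames")   # 2 of 5
```

Two of every five video frames ('B','C' and 'C','D') mix fields from two different film frames.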

Additional reading:

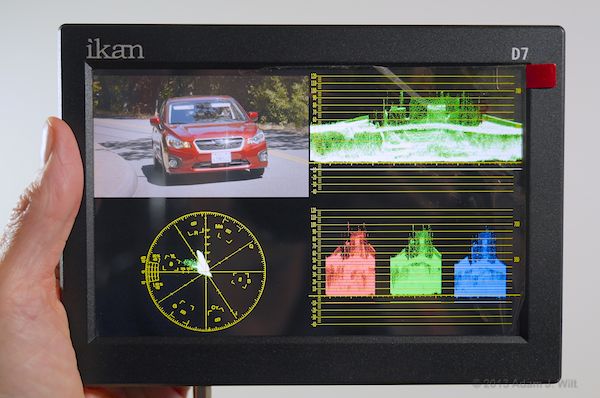

Dedicated Signal Monitoring

Professional editors used to have to spend $5,000-$10,000 for dedicated monitoring gear. Now it can be had for about $700 (+ the price of a PC) with Blackmagic Design's UltraScope. There are two versions: a PCI card version and a dongle which you can use with a laptop in the field. The display looks like this:

It provides:

- RGB Parade

- waveform monitor

- vectorscope

- histogram

- audio levels & spectrum

- video monitor

- error logging

Error logging is an interesting and useful feature. Essentially you can turn on the logging, start playing your footage, and go to lunch (let the entire program play and be logged). It records the errors and the exact time they happened.

Jim's Portable Edit Setup:

When I'm traveling light and need to edit, I rely on my MacBook Pro with a second monitor. To monitor my video I use a small 7" monitor, the ikan D7w (pictured below). I use this one because it has a built-in waveform monitor and vectorscope. It has both HDMI and HD-SDI loop-through inputs and is large enough to provide critical info for focusing but small enough to attach to a camera.

The ikan D7w Field monitor:

Premiere lets you add additional monitors. Once one is plugged in, go to Premiere Preferences / Playback and check the box next to your monitor under "video device".

Under the "Window" menu you can choose "Reference Monitor" to open an additional monitor if needed.

2K, 4K, Ultra HD and beyond................

HD is great, but there's something even better: 2K and 4K. Check out the Wikipedia entry on it.

Here's a pretty good visual comparison of the various formats: http://www.manice.net/index.php/glossary/34-resolution-2k-4k

2K provides only slightly more information than HD: 2048 pixels per line compared with 1920. But the format was embraced by the digital cinema industry. The Phantom Menace introduced the world to Digital Cinema. Digital Cinema is not about production but about the distribution of theatrical content. Digital Cinematography refers to using a digital workflow to create films.

Most have ignored 2K and focused on 4K, which provides roughly four times the information of HD.

Just as HD comes in varying pixel dimensions for broadcast and recording, 4K comes in different sizes as well. Cinema (DCI) 4K has 4096 pixels per line, while the Ultra HD television format is 3840 x 2160.
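The pixel counts behind these comparisons are easy to check. This sketch uses the common DCI and Ultra HD frame sizes; other 4K variants exist, so treat the list as illustrative.

```python
HD_PIXELS = 1920 * 1080

# Common frame sizes (width, height); other 4K variants exist.
FORMATS = {
    "HD": (1920, 1080),
    "2K (DCI)": (2048, 1080),
    "Ultra HD": (3840, 2160),
    "4K (DCI)": (4096, 2160),
}


def ratio_to_hd(width: int, height: int) -> float:
    """How many times more pixels a frame has than 1920x1080 HD."""
    return width * height / HD_PIXELS


for name, (w, h) in FORMATS.items():
    print(f"{name:9} {w}x{h}: {w * h:>9,} pixels, {ratio_to_hd(w, h):.2f}x HD")
```

Ultra HD works out to exactly 4x HD, DCI 4K to about 4.27x, and 2K to only about 1.07x.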

Want to shoot in 4K?

- Blackmagic Design and AJA both have popular 4K cameras

- The Sony F5 and FS7 cameras shoot on a Super 35mm sensor

- The Sony AX1 camcorder (about $4,500) uses a 1/2.3" CMOS sensor

- Sony a9 mirrorless camera (about $4,300) uses a full-frame (35mm) sensor

- Canon XC15 (about $2,300) uses a 1" sensor

- Canon EOS C200 (about $8,400) uses a Super 35mm CMOS sensor

- GoPro Hero 5 (about $400) uses a 1/2.3" CMOS sensor

- Panasonic GH5 mirrorless camera (about $2,000) uses a Micro Four Thirds CMOS sensor

Ultra HD (Ultra High-Definition) refers to formats of 4K and above.

It's possible to produce and deliver content at even higher resolutions (e.g., 10K at 10328 x 7760 pixels). Here's a link to a 10K timelapse video shot in Brazil.

Metadata

Metadata (data about the data) is embedded text and numeric information about the clip or program. It can include, but is not limited to:

- timecode

- camera

- exposure

- gain

- clip name

- clip content

- running time / duration

- latitude/longitude

- audio levels

- DRM (digital rights management)

Metadata can be stored and accessed as XMP (Extensible Metadata Platform, which is based on XML). XMP can be embedded in many media files, but some formats do not allow this, so the data is written to a separate sidecar file instead.

This is why it's important to keep the directory structure found on Canon DSLR cards and Sony XDCam storage devices intact. Key information (such as timecode) is often stored in a separate file.

Closed captioning is one type of metadata that can be displayed on screen for the hearing-impaired. In analog NTSC, caption data was carried in the vertical blanking interval (line 21); digital broadcasts carry it as data within the video stream. The FCC mandates that stations broadcast programming with closed-captioning data. If you watch closed-captioned programming, you'll see wide variation in readability, placement, and duration.
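To see what a sidecar can hold, here is a minimal sketch that writes a few clip notes as an XMP packet with Python's built-in XML library. It assumes two text properties from Adobe's XMP Dynamic Media (xmpDM) schema, `shotName` and `logComment`; the clip name and values are hypothetical, and real sidecars written by camera or editing software usually contain many more fields plus an xpacket wrapper.

```python
import xml.etree.ElementTree as ET
from typing import Dict

X = "adobe:ns:meta/"
RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
XMP_DM = "http://ns.adobe.com/xmp/1.0/DynamicMedia/"   # XMP Dynamic Media schema
ET.register_namespace("x", X)
ET.register_namespace("rdf", RDF)
ET.register_namespace("xmpDM", XMP_DM)


def make_sidecar(fields: Dict[str, str]) -> str:
    """Return a minimal XMP packet holding simple text properties from the xmpDM schema."""
    root = ET.Element(f"{{{X}}}xmpmeta")
    rdf = ET.SubElement(root, f"{{{RDF}}}RDF")
    desc = ET.SubElement(rdf, f"{{{RDF}}}Description", {f"{{{RDF}}}about": ""})
    for name, value in fields.items():
        ET.SubElement(desc, f"{{{XMP_DM}}}{name}").text = value
    return ET.tostring(root, encoding="unicode")


xml_text = make_sidecar({"shotName": "Interview A, take 2", "logComment": "Good audio"})
print(xml_text)
# Saved next to the clip with a matching name (e.g. a hypothetical CLIP0001.xmp),
# this text becomes the sidecar file.
```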
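The agenda also lists subtitles. Broadcast closed captions use their own data formats, but for web delivery, subtitles are often supplied as a simple text sidecar such as SubRip (.srt). This sketch assumes the usual SRT layout (cue number, start --> end timestamps, text, blank line); the cue text is hypothetical.

```python
from typing import List, Tuple


def srt_time(ms: int) -> str:
    """Format milliseconds as an SRT timestamp, HH:MM:SS,mmm."""
    hours, ms = divmod(ms, 3_600_000)
    minutes, ms = divmod(ms, 60_000)
    seconds, ms = divmod(ms, 1000)
    return f"{hours:02}:{minutes:02}:{seconds:02},{ms:03}"


def to_srt(cues: List[Tuple[int, int, str]]) -> str:
    """Build SubRip (.srt) text from (start_ms, end_ms, text) cues."""
    blocks = []
    for number, (start, end, text) in enumerate(cues, start=1):
        blocks.append(f"{number}\n{srt_time(start)} --> {srt_time(end)}\n{text}\n")
    return "\n".join(blocks)


print(to_srt([(1000, 3500, "Welcome to the edit suite."),
              (4000, 6250, "Let's check the levels.")]))
```

The start and end times are where duration (and therefore readability) is controlled; placement is left to the player in plain SRT.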

Good metadata overview by Philip Hodgetts: http://www.youtube.com/watch?v=GnPzpPvoyLA

Vocabulary (Know these terms)

- 2K

- 4K

- 4:4:4, 4:2:2, 4:1:1, 4:2:0

- Broadcast safe levels

- Closed Captioning

- Codec (short for coder/decoder)

- Color Correction

- Color Sampling/Subsampling

- Digital Cinema

- Digital Cinematography

- LUT

- Metadata

- Telecine

- Transcode

- Ultra HD

Final Test Review

Final Exam is worth 70 points! The best way to review for it is to study the class notes and the midterm (expect everything you got wrong on the midterm to be on the final). The final will be true/false, multiple-choice, and short-answer. It will cover the following areas:

- Shooting/Editing Techniques

- Cybercollege editing guidelines

- Edits work best when motivated

- Whenever possible cut on subject movement.

- Keep in Mind the Strengths and Limitations of the Medium (TV is a close-up medium)

- Cut away from the scene the moment the visual statement has been made.

- Emphasize the B-Roll

- If in doubt, leave it out

- Continuity

- Acceleration editing

- Expanding time

- Causality & Motivation (Must have in order to be successful)

- Relational editing (Shots gain meaning when juxtaposed with other images. Pudovkin's experiment)

- Thematic editing (montage)

- Parallel editing

- What is color correction vs. color grading?

- What is a LUT?

- What are broadcast safe levels?

- Cameras

- Imaging devices: CCDs and CMOS

- What do our cameras use?

- zebra stripes - What are they good for? What would you set them for?

- Gain - What does turning it up do to audio/video?

- Shutter speeds - Why would you change this?

- Lenses

- Depth of Field - what affects this?

- Rack focus - How can you achieve this?

- Angle of view & focal length - How are they related?

- f-stops - Know your f-stops & what they mean

- ND filters - What are they good for?

- Audio

- Microphones (polar pickup patterns and types)

- Hz, kHz, CPS, dB

- Balanced vs Unbalanced cables/circuits

- Line level vs. Mic level

- Graphics (Review Jim's Graphic Tips)

- Lighting

- types of lighting instruments

- color temp

- HMI

- Lux vs footcandles

- soft vs hard key

- broad vs narrow lighting

- Video signal / technology

- ATSC, NTSC v DTV, SDTV & HDTV

- HDTV pixel dimensions (1920 x 1080 or 1280 x 720)

- Progressive v Interlace video

- Metadata

- Color Space: RGB vs Color Difference (Y,Cb,Cr)

- Digital Cinema vs Digital Cinematography

- timecode (difference between drop & non-drop)

- waveform monitors & vectorscopes (What do they show?)

- what are the important IRE levels?

- Video Containers/Wrappers vs. video codecs

- Color sampling (4:4:4 v 4:2:2 v 4:1:1 v 4:2:0)

- 3-2 pulldown
